Master Validity and Assessment: Essential Guide for Accurate Measurement

When you're trying to measure something, there's one question that trumps all others: Is this tool actually measuring what I think it's measuring? This is the essence of validity. For any kind of assessment, from a job interview to a company-wide culture survey, this isn't just an academic thought experiment. It's the bedrock of any credible decision you make with that data. Without it, you're building on sand.

What Is Assessment Validity and Why It Matters

Think about it like this: Imagine you have a kitchen thermometer that always reads five degrees too high. You'd make all sorts of bad decisions, probably undercooking every meal, because the food would seem done before it actually is. That simple error gets to the very heart of validity and assessment. An assessment is just a tool for measurement, and validity is how we know whether that tool is working correctly.

If an assessment isn't valid, the data it churns out isn't just worthless—it’s actively harmful. It can send you down the completely wrong path, leading to bad hires, ineffective training programs, and strategies that are doomed from the start.

The Real-World Impact of Poor Validity

The consequences of using an invalid assessment can be massive. This isn't just theory; it has real, tangible costs.

Consider these real-world scenarios:

- Hiring Decisions: An invalid pre-employment test could accidentally filter out your best candidates or, even worse, greenlight people who are a terrible fit. Research by Schmidt & Hunter (1998) shows that valid selection procedures can significantly increase employee productivity, which underscores how costly an invalid one can be in lost performance.

- Student Evaluations: A final exam that doesn't accurately test what was taught gives teachers and parents a distorted picture of a student's understanding. Educational plans based on that faulty data won't help anyone.

- Policy and Compliance: In critical fields like pharmaceutical manufacturing, the validity of safety and quality checks is everything. Agencies like the UK's Medicines and Healthcare products Regulatory Agency (MHRA) rely on valid assessments to certify compliance, which is essential for ensuring public safety and maintaining global trade.

Validity isn't a "nice-to-have" feature of a good assessment; it's the entire point. It’s the proof that your interpretations of the results are sound and meaningful for their intended purpose.

The Foundation of Trustworthy Measurement

At the end of the day, focusing on validity is about committing to smart, evidence-based decisions. It protects the integrity of your entire process, from how you word the questions to how you interpret the final scores. A common mistake that trips people up is writing confusing questions. Double-barreled questions ask about two things at once, for example, "How satisfied are you with your pay and your manager?" They are a classic case. Someone who likes one but not the other has no accurate way to answer, so the question produces muddled, unusable data.

By making sure your assessment is valid, you're confirming that you are measuring the right things in the right way. This builds trust in your data and gives leaders the confidence to act, knowing their decisions are backed by accurate insights, not just guesswork. Establishing solid validity and assessment practices is the first, most important step toward getting measurement right.

The Three Pillars of Validity You Need to Know

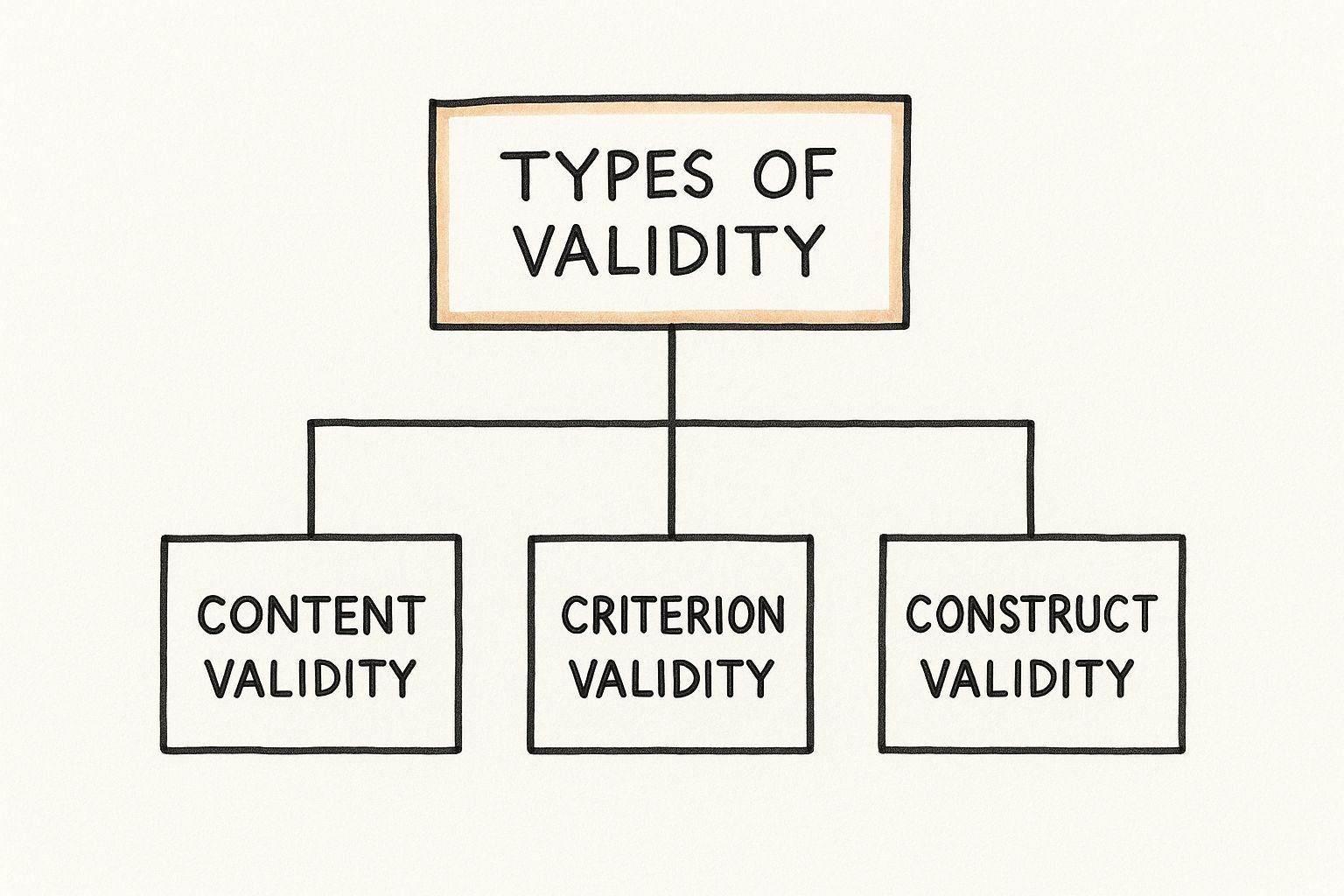

When you're trying to figure out if an assessment is any good, you need to look beyond the surface. The real measure of a test's worth comes down to a concept called validity. Think of it like a three-legged stool: for it to be stable and trustworthy, all three legs must be strong. These legs are known as construct validity, content validity, and criterion validity.

Each one answers a different, crucial question about how accurate and meaningful an assessment really is. Once you get a feel for these, you'll stop asking, "Is this a good test?" and start asking the right questions, like why it's good and what it's actually useful for.

A truly solid assessment isn't built on just one type of proof. It needs evidence from all three pillars (content, criterion, and construct) to be considered sound. Let's break them down.

Construct Validity: The Foundation of Meaning

Let’s start with construct validity, which is really the heart of the matter. It gets right to the core question: Is this test actually measuring the abstract idea, or "construct," it claims to be measuring?

This is a big deal because most of the things we want to measure in people aren't simple, physical objects. You can't put a tape measure on concepts like intelligence, leadership potential, or creativity. These are complex constructs built from many different behaviors and traits.

Imagine you wanted to build a great test for "creativity." You can't just ask someone if they're creative. You'd have to measure several related components, such as:

- Their ability to generate lots of different ideas (divergent thinking)

- How they approach and solve unique problems

- The originality of their thinking

- Their willingness to take smart risks

If your "creativity" test only measured, say, a person's drawing skills, it would be missing the mark. It would have poor construct validity because it's ignoring all the other critical pieces that make up the bigger picture of creativity.

Content Validity: Covering All the Bases

Next up is content validity, which is much more straightforward. This one asks: Does the assessment cover the entire range of material it's supposed to?

Picture a final exam for a year-long history course. If the professor only included questions from the first two weeks of class, that exam would fail the content validity test. The questions might be perfectly fine, but the test as a whole doesn't represent the full curriculum. It's incomplete.

A classic example is the exam you take to get a driver's license. To have strong content validity, it must test everything that goes into being a safe driver—knowing traffic laws, recognizing road signs, handling the car in different situations like parallel parking, and merging onto a highway. If it only tested your ability to park, it obviously wouldn't be a valid assessment of your overall driving skill.

This is just as important in the business world. For an organizational culture assessment to be effective, it can't just measure one or two aspects of your workplace. It has to cover all the key dimensions that truly define your organization's unique culture.
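A practical way to check content coverage is to map every item to the dimension it is meant to cover and then look for gaps. This mapping is often called a test blueprint. The sketch below is a minimal illustration under stated assumptions. The dimension names, the item-to-dimension mapping, and the `coverage_report` helper are all hypothetical, and none of them come from any real platform.

```python
from collections import Counter

# Hypothetical culture dimensions the assessment is supposed to cover.
REQUIRED_DIMENSIONS = [
    "communication",
    "collaboration",
    "leadership",
    "conflict_handling",
]

# Hypothetical blueprint: each survey item is tagged with the dimension it targets.
ITEM_DIMENSIONS = {
    "Q1": "communication",
    "Q2": "communication",
    "Q3": "collaboration",
    "Q4": "leadership",
}


def coverage_report(required, item_map, min_items=2):
    """Count items per dimension and flag dimensions with fewer than min_items items."""
    counts = Counter(item_map.values())
    # Dimensions with too few items (including none at all) are coverage gaps.
    gaps = {dim: counts[dim] for dim in required if counts[dim] < min_items}
    return counts, gaps


counts, gaps = coverage_report(REQUIRED_DIMENSIONS, ITEM_DIMENSIONS)
print(gaps)  # {'collaboration': 1, 'leadership': 1, 'conflict_handling': 0}
```

The `min_items=2` cutoff is arbitrary. In practice, subject-matter experts decide what adequate coverage of each dimension looks like. A count like this only reveals obvious holes, such as a dimension with no items at all.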

Criterion Validity: Predicting What Happens Next

Finally, we have criterion validity, which is all about prediction. The key question here is: How well do the results of this assessment predict a specific, real-world outcome? This is where the rubber meets the road, connecting a score on a test to actual performance.

The SAT is a well-known example. Historically, colleges have used SAT scores because research demonstrated a correlation between higher scores and a student's first-year college GPA (the "criterion"). For instance, a 2019 College Board study found a correlation coefficient of .47 between SAT scores and first-year GPA. The test's power to help predict that future academic success is what gives it criterion validity.

In the corporate world, this is a game-changer. You might evaluate the criterion validity of a pre-hire sales assessment by tracking new hires for six months. If the people who scored highest on the test consistently become your top-performing salespeople, that is strong evidence the assessment has high criterion validity. It's actually helping you find future stars.

The Three Pillars of Assessment Validity

To help you keep these straight, here’s a quick-reference table that boils it all down.

| Type of Validity | What It Measures | Key Question It Answers |
|---|---|---|
| Construct Validity | The underlying, abstract concept. | Does the test accurately measure the trait it's supposed to (e.g., creativity, intelligence)? |
| Content Validity | The full scope of the subject matter. | Does the test cover all the relevant parts of the topic (e.g., all driving rules)? |
| Criterion Validity | The predictive power of the results. | Do the test scores accurately predict a future outcome (e.g., job performance)? |

At the end of the day, these three pillars don't work in isolation. A truly well-designed assessment is built on a foundation of evidence from all three. This ensures that the results aren't just comprehensive and relevant but also genuinely meaningful and predictive.

How Validity Is Tested on a Global Scale

So far, we’ve talked about validity in relatively controlled settings. But what happens when you need to measure something across the entire globe? Imagine trying to create a single math test that’s just as fair and accurate for a student in Tokyo as it is for one in Toronto or Turku. This is the massive challenge at the heart of international large-scale assessments (ILSAs).

These high-stakes tests provide a revealing case study in validity and assessment. They aim to compare educational performance across dozens of countries, but making that comparison meaningful is a monumental task. The core question always comes back to this: does the test truly measure the same thing everywhere?

The Challenge of Cross-Cultural Measurement

The biggest hurdle for any global test is making sure it measures the intended skill—the "construct"—consistently, no matter the language or culture. A seemingly simple math problem can be a minefield of cultural bias. The wording, the context, even the way the question is formatted can trip up students from different backgrounds.

Overcoming this requires an almost obsessive validation process:

- Careful Translation: You can't just run the questions through a translation tool. They have to be carefully adapted by teams of experts to ensure the meaning, nuance, and difficulty level stay exactly the same. This often involves a "translate and back-translate" method to catch any discrepancies.

- Cultural Adaptation: Any references that are common in one culture but foreign in another have to go. A word problem about baseball stats in the U.S. might become one about soccer in Brazil to maintain its relevance and clarity.

- Pilot Testing: Before the real test goes live, questions are tried out on smaller groups in every participating country. This helps researchers spot and eliminate confusing or biased items that didn't get caught earlier.

Even with all this work, experts still debate whether a perfectly "culture-free" test is possible. This is why a solid grasp of validity is so important when you interpret the results. The same principles are also critical when building an organizational culture assessment for a modern company, because measuring culture across diverse internal teams presents a very similar set of challenges.

Here's the bottom line: don't take country rankings at face value. While these international tests provide incredibly valuable data, they aren't a simple scoreboard of which nation's education system is "best." They're complex tools that demand thoughtful interpretation.

The Problem with League Tables

Assessments like PISA (Programme for International Student Assessment) and TIMSS (Trends in International Mathematics and Science Study) have had a huge impact on education policy since PISA first launched in 2000. The media loves to turn their findings into "league tables," ranking countries from top to bottom.

But there are serious questions about the validity of using these rankings as a direct measure of a country's educational quality or its future economic prospects. For example, a 2013 paper by the Economic Policy Institute found little evidence that national PISA scores are correlated with economic growth. There are just too many other socio-economic factors at play.

This is where a simplistic view can be dangerous. A government might see its country slip a few spots in the PISA rankings and immediately try to copy the curriculum of a top-performing nation. But this rarely works, because it ignores the deep cultural and social differences that make a direct transplant impossible. The data should be a starting point for asking questions, not a final answer.

A Deeper Look at What Scores Mean

Ultimately, the validity of a global assessment isn't just about the test itself; it’s about how people use the results. A valid interpretation means acknowledging the data's limitations. For example, a country’s average score could be pulled up or down by factors that have nothing to do with the classroom, such as:

- Socio-economic Disparity: High poverty rates can drag down student performance, but a math test doesn't measure poverty.

- Student Motivation: In some cultures, students take these tests very seriously. In others, not so much. That can definitely affect the scores.

- Curriculum Alignment: The test might just happen to line up better with what’s taught in one country’s schools compared to another’s.

Understanding these variables is what a mature approach to validity and assessment looks like. It shifts the conversation from a competitive "Who won?" to a more constructive "What can we learn from this?" This allows policymakers to use ILSA data as a mirror for reflection, not a weapon for political fights.

Ensuring Fairness and Reliability Across Cultures

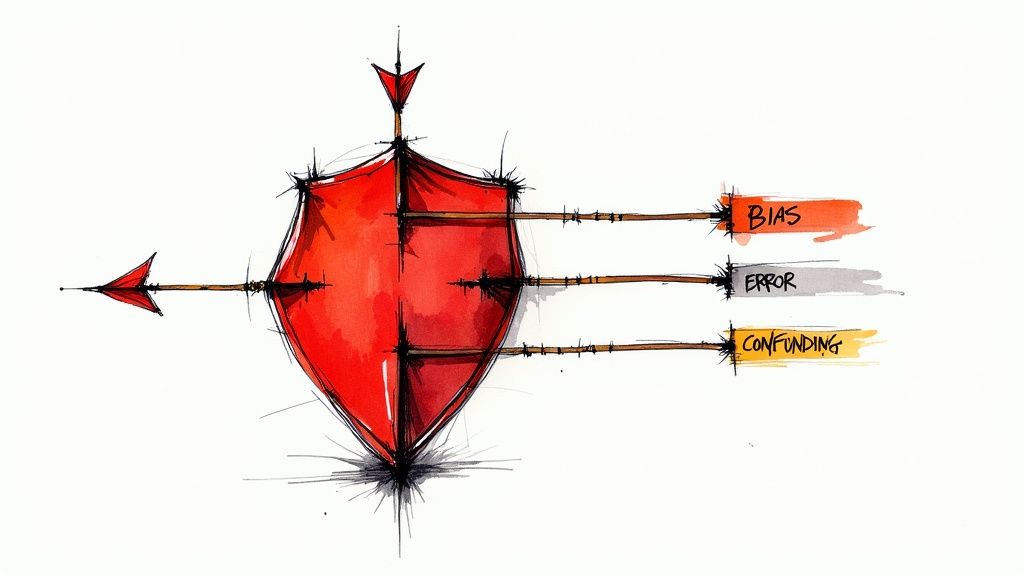

For any assessment to be worth its salt, especially on a global scale, it has to clear two major hurdles. We often call these the 'twin imperatives': reliability and validity. While validity is about measuring the right thing, reliability is about getting consistent results whenever you measure the same thing under the same conditions.

Let's use an analogy. Think of an archer. Validity is aiming at the correct target. Reliability is hitting the same spot with every arrow, shot after shot. A reliable but invalid assessment is like an archer who consistently hits the tree just left of the target. The shots are tightly grouped, so they are consistent, but they are inaccurate and completely miss the point.

Achieving both requires a painstaking, almost obsessive commitment to fairness, especially when your audience spans different cultures, languages, and backgrounds.

Building Trustworthy Global Assessments

Organizations like the International Association for the Evaluation of Educational Achievement (IEA) have spent decades refining the science of fair global measurement. For them, validity is everything, because without it cross-country comparisons would be meaningless. They've pioneered rigorous quality control procedures designed to minimize error and bias at every stage.

This meticulous process involves several critical steps:

- Culturally Sensitive Design: They carefully vet every topic and question to make sure they're understood and relevant across all participating countries. This means avoiding idioms or cultural contexts that might give one group an unfair advantage.

- Strict Population Sampling: To make fair comparisons, you need to test truly representative groups. The IEA uses sophisticated sampling methods to ensure the students selected accurately reflect their national student populations.

- Intensive Data Verification: Once the data comes in, it's not just accepted at face value. It goes through multiple rounds of checks and adjudication to spot and correct any anomalies or errors that could skew the results.
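To see why sampling design matters, here is a simplified illustration of proportional stratified sampling. The population is split into strata, such as school types, and each stratum gets sample slots in proportion to its size. This is a toy built on assumptions, not the IEA's actual procedure, which uses more elaborate multi-stage designs that sample schools first. The strata names, counts, and helper functions are hypothetical.

```python
import random

# Hypothetical student counts per stratum (e.g., school type); not real data.
population_sizes = {"urban_public": 60_000, "rural_public": 30_000, "private": 10_000}


def proportional_allocation(sizes, total_sample):
    """Split total_sample across strata in proportion to each stratum's size.

    Uses the largest-remainder method so the allocations sum exactly to total_sample.
    """
    population = sum(sizes.values())
    raw = {s: total_sample * n / population for s, n in sizes.items()}
    alloc = {s: int(v) for s, v in raw.items()}
    shortfall = total_sample - sum(alloc.values())
    # Hand leftover slots to the strata with the largest fractional remainders.
    for s in sorted(raw, key=lambda k: raw[k] - alloc[k], reverse=True)[:shortfall]:
        alloc[s] += 1
    return alloc


def draw_sample(ids_by_stratum, alloc, seed=0):
    """Draw a simple random sample within each stratum (fixed seed for reproducibility)."""
    rng = random.Random(seed)
    return {s: rng.sample(ids, alloc[s]) for s, ids in ids_by_stratum.items()}


alloc = proportional_allocation(population_sizes, 999)
print(alloc)  # {'urban_public': 599, 'rural_public': 300, 'private': 100}

student_ids = {s: [f"{s}-{i}" for i in range(n)] for s, n in population_sizes.items()}
sample = draw_sample(student_ids, alloc)
```

Sampling within strata guarantees that a small group, like the private schools here, gets its fair share of the sample. A purely random draw could under-represent it by chance.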

This sophisticated balancing act, adapting tools for different cultures without losing the ability to compare them, is the very heart of ensuring both reliability and validity. For a deeper dive, explore these complex quality control procedures and why they are so essential for global assessments.

Reliability Beyond the Test

While an assessment must be valid, its reliability is just as crucial. You need consistent results over time. This principle of consistency isn't just for tests; it's vital in business operations too, like increasing consistency in service delivery to provide fair and predictable customer experiences.

Just as a customer expects the same quality of service no matter which agent they talk to, an organization must trust its assessments to produce stable, dependable data. If a tool gives you wildly different results for the same group under similar conditions, it's unreliable. You can't trust that data to make important decisions.

Practical Steps for Ensuring Reliability

So, how do you actually make an assessment reliable? It all comes down to eliminating as much randomness or "noise" as you can. Clear instructions, unambiguous questions, and standardized scoring are absolutely non-negotiable.

The key principle is this: an assessment cannot be valid if it is not first reliable. Reliability is necessary, but not sufficient, for validity. If your measurements are all over the place, they can't consistently be accurate. Yet measurements can be perfectly consistent and still be consistently wrong.
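Classical test theory makes this relationship precise. It assumes that every observed score is a true score plus random error. Under that assumption, the correlation between a test $X$ and a criterion $Y$ can be no larger than the square root of the product of their reliabilities:

$$
r_{XY} \le \sqrt{r_{XX'}\, r_{YY'}}
$$

Here $r_{XX'}$ and $r_{YY'}$ are the reliability coefficients of the test and the criterion. For example, a test with a reliability of 0.64 cannot correlate above $\sqrt{0.64} = 0.8$ with any criterion, even a perfectly measured one ($r_{YY'} = 1$).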

Think about these practical ways to boost reliability:

- Standardize Administration: Make sure every single participant takes the assessment under the exact same conditions. That means the same time limits, the same instructions, and the same environment.

- Clarify Scoring Rules: For any open-ended questions, create a detailed scoring rubric. The goal is to ensure two different raters would score the same response in precisely the same way.

- Pilot Testing: Before you go all-in, test the assessment on a small, representative sample. This is your chance to find confusing questions or instructions that could create inconsistencies.
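After a pilot round, you can check whether a scoring rubric actually makes raters consistent. A common statistic for two raters assigning categorical scores is Cohen's kappa, which measures agreement beyond what chance alone would produce. The sketch below is written from scratch rather than calling any particular statistics package. The rubric scores are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who scored the same responses."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must score the same, non-empty set of responses")
    n = len(rater_a)
    # Observed agreement: share of responses where both raters gave the same score.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected chance agreement, from each rater's marginal score distribution.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_expected == 1:
        return 1.0  # both raters used one identical category throughout; kappa is undefined
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical rubric scores (1-3) from two raters on the same ten answers.
rater_1 = [3, 2, 2, 1, 3, 3, 2, 1, 2, 3]
rater_2 = [3, 2, 1, 1, 3, 2, 2, 1, 2, 3]
print(round(cohens_kappa(rater_1, rater_2), 2))
```

Here the raters agree on 8 of 10 answers, but chance alone would produce some of that agreement, so kappa comes out lower than 0.8. Values near 1 indicate strong agreement, and values near 0 mean agreement no better than chance. A low kappa suggests the rubric or rater training needs work.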

By focusing on these practical elements, you can build assessments that are not only valid—measuring what you actually intend to measure—but also highly reliable. This dual focus ensures the data you gather is a trustworthy foundation for making critical decisions about people, policies, and performance, no matter the cultural context.

How Validity Applies to Organizational Culture Assessments

It’s one thing to talk about theoretical concepts like validity in a textbook, but it's another thing entirely to see them become powerful tools for solving real-world business challenges. This is especially true when you're trying to measure something as complex and nuanced as organizational culture.

How do you really quantify something like ‘psychological safety’ or a team's ‘readiness for innovation’? It takes more than a simple survey. It requires an assessment built on a rock-solid foundation of validity and assessment principles.

Just asking your employees if they feel "innovative" barely scratches the surface. A truly valid culture assessment has to dig much deeper, connecting those abstract cultural values to concrete, observable behaviors. This is how leaders move past gut feelings and start using reliable data to understand their organization's health and drive real, strategic change.

Bringing the Three Pillars of Validity into the Boardroom

Let's pull those three pillars of validity (content, construct, and criterion) out of the academic world and put them to work. A scientifically grounded platform like MyCulture.ai is a clear example of how these principles are applied to build a culture assessment that actually means something.

Content Validity: Think of this as comprehensive coverage. For an assessment to be complete, it needs to look at all the crucial dimensions that make up your company’s unique culture. This means going beyond a few high-level values and digging into things like communication styles, collaboration norms, leadership behaviors, and how your teams handle conflict. If an assessment only measures one or two things, it lacks content validity and gives you a dangerously incomplete picture.

Construct Validity: This one is arguably the most important for culture. A valid assessment has to accurately measure the underlying psychological concepts that shape how people behave at work. To measure "psychological safety," for instance, the assessment can't just ask about safety. It has to probe the related factors that create it, like trust in leadership, how comfortable people are expressing a dissenting opinion, and what they believe the consequences are for making a mistake.

Criterion Validity: This is the ultimate test: do the assessment results actually predict real-world outcomes? A validated culture assessment should show that scores in relevant areas are linked to business results. Research on employee engagement illustrates the kind of link to look for. A Gallup meta-analysis found that business units in the top quartile of employee engagement (a cultural indicator) had 81% lower absenteeism and 18% higher productivity than bottom-quartile units.

Turning Data Points into a Plan of Action

When an assessment is properly validated, it turns fuzzy feelings into a clear roadmap. Once you can trust the data, you can see exactly where your culture is thriving and where it needs some help. Effective culture assessments often evaluate specific behaviors, such as how teams communicate, and applying these validity principles helps ensure the resulting insights are meaningful. You can find excellent strategies to improve workplace communication that can help you turn those insights into real improvements.

A validated instrument gives leaders the confidence to act. Instead of guessing why a team is underperforming, you can see clear data pointing to a breakdown in communication norms or a lack of alignment on core values.

This level of detail is what allows for targeted, effective action. You can stop launching generic, company-wide "culture initiatives" and instead focus your time and resources where they will make the biggest difference. This data-driven approach is also incredibly valuable for bringing new people on board; you can read more about using these tools to find the right fit in our post on harmony in hiring with culture assessments.

The Power of a Validated Platform

Using a validated platform like MyCulture.ai isn't just about getting data; it's about getting a powerful diagnostic tool. It ensures the questions you're asking are not only relevant (content validity) but are also precisely measuring the invisible forces that shape how your teams actually work together (construct validity). This is what allows organizations to build a more connected and productive workplace based on solid evidence, not just wishful thinking.

At the end of the day, rigorous validity and assessment practices are what separate a genuinely helpful tool from a simple box-ticking exercise. By making sure an assessment is valid, leaders can be confident they are getting an accurate reading of their organization's health. That gives them the power to make smarter decisions that build a stronger, more resilient culture.

Common Questions About Validity and Assessment

We've covered a lot of ground in the world of validity and assessment, so it's natural for a few questions to pop up. These ideas are powerful, but let's be honest, they can sometimes feel a bit abstract. This section clears things up, tackling the most common uncertainties head-on with practical, straightforward answers.

Think of this as our final check-in, making sure the key concepts are crystal clear. Nailing these details is what elevates an assessment strategy from just "good" to truly great.

Can an Assessment Be Reliable but Not Valid?

Yes, absolutely. This is one of the most important distinctions to grasp. Reliability is all about consistency, whereas validity is about accuracy. They are not the same thing.

Here's a simple way to think about it: Imagine a bathroom scale that's off by ten pounds. Every morning, it tells you that you weigh exactly ten pounds less than you really do. That scale is highly reliable, because it gives you the same result every time. It is not valid, because it isn't accurately measuring your actual weight. The data, while consistent, can't tell you what you actually weigh.
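The scale example is easy to simulate. Give each measurement a fixed offset (systematic error) plus a tiny amount of random noise, then compare consistency with accuracy. The true weight, offset, and noise level below are arbitrary numbers chosen for illustration.

```python
import random
from statistics import mean, pstdev

TRUE_WEIGHT = 170.0  # hypothetical true weight, in pounds
OFFSET = -10.0       # the scale always reads ten pounds light (systematic error)
NOISE = 0.2          # small random error per reading, in pounds

rng = random.Random(42)  # fixed seed so the run is reproducible
readings = [TRUE_WEIGHT + OFFSET + rng.gauss(0, NOISE) for _ in range(30)]

spread = pstdev(readings)            # small spread: the scale is reliable
bias = mean(readings) - TRUE_WEIGHT  # large bias: the scale is not valid
print(f"spread = {spread:.2f} lb, bias = {bias:.2f} lb")
```

Taking more readings shrinks the effect of random noise, but it never removes the ten-pound bias. Consistency alone cannot fix a systematic error.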

The same exact principle applies to assessments. A pre-hire test could give a candidate the same score every time they take it, but if that test isn't measuring skills that actually matter for the job, it’s not valid. It's just consistently measuring the wrong thing, making it a poor tool for any hiring decision.

How Is Validity Different from Fairness in Testing?

This is another critical point of confusion. While validity and fairness are closely connected, they are two separate concepts that look at an assessment from different angles.

Validity is a technical question: Does this test accurately measure what it says it measures for everyone who takes it? For example, does a math test truly assess mathematical ability for all students, no matter what their primary language is?

Fairness is a much broader, ethical idea. It’s concerned with the social impact of using the test scores. It asks if the assessment process is free from bias and if the results are applied in a just, equitable manner.

A test can be technically valid but still be used unfairly. For instance, using a perfectly valid cognitive ability test as the only factor in a hiring decision could be unfair if it systematically screens out great candidates who have tons of relevant skills and experience but come from different educational backgrounds. To learn more about building equitable hiring processes, check out our guide on reducing hiring bias with AI tools and an evidence-based approach.

What Is Face Validity and Is It Important?

Face validity is the most informal type of validity out there. It boils down to a simple gut check: At first glance, does this assessment look like it's measuring what it's supposed to?

For example, asking an administrative assistant candidate to complete a typing test has high face validity. It seems directly relevant to the job. But asking that same candidate to solve complex physics problems would have extremely low face validity—it would feel random and pointless.

While face validity isn’t strong scientific proof like construct or criterion validity, it’s incredibly important for one practical reason: participant buy-in.

If people taking a test don't believe it's relevant or fair, their motivation can sink. They might not take it seriously, which can throw off the results and undermine the assessment's overall reliability and validity. When an assessment looks credible, it encourages honest and engaged participation.

How Often Should Validity Be Re-Evaluated?

Validity isn’t a one-and-done certificate you hang on the wall; it’s a living process. An assessment that’s perfectly valid today might lose its edge in a few years.

Treat it like software that needs regular updates to stay effective. A job's core responsibilities can change, the demographics of your talent pool can shift, and new research can provide better methods. Because of this, it's crucial to re-evaluate your assessments from time to time.

A good rule of thumb is to review your key assessments every few years to confirm they still have strong criterion validity, meaning they still accurately predict on-the-job performance. In fast-moving industries, you might need to do this even more frequently. This commitment to ongoing validation is what keeps your assessment tools sharp, relevant, and effective for the long haul.

Ready to build a stronger, more cohesive team with data you can trust? MyCulture.ai offers a science-backed platform that ensures your culture and skills assessments are built on a solid foundation of validity. Move beyond guesswork and make hiring decisions with confidence by visiting https://www.myculture.ai to learn more.